In the last post we got as far as obtaining a list of files (web addresses and descriptions) to download and extract data from. Let’s take a look at one of these.

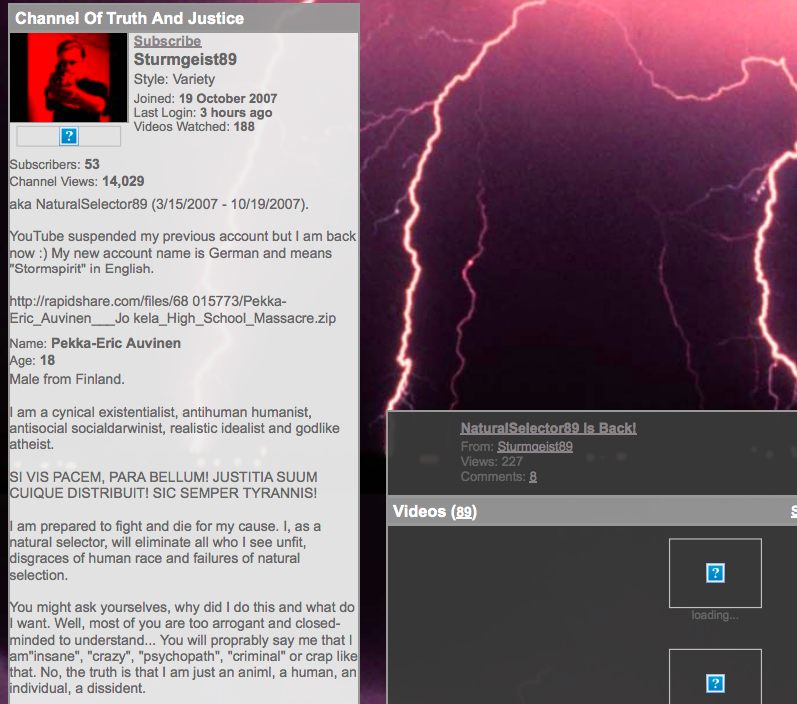

Here we have an image of Pekka-Eric Auvinen’s former YouTube profile. Recall that we are seeking mentions of other shooters and their crimes.

While there is no overt, formal naming of another shooter, someone familiar with school shootings will recognize “Natural Selection” as a catchphrase of Eric Harris, the Columbine school shooter. However, the exact phrase “Natural Selection” does not appear. This tells us that in some cases it is preferable to search for a more general keyword such as “natural + [possible space] + select” (since it could be selectION, selectOR, etc.). This kind of generalization will be useful in several instances. We may also notice the term “godlike”, which is another Harris/Columbine meme. Fortunately for us, only a limited number of shooters have such a substantial “fan following” among other shooters that their speech patterns are reproduced in endless combinations and permutations as in-jokes or references; most of these come from Columbine. In short, we should make a note to include non-name references in the name search list as though they were nicknames for the shooters.
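Here’s a minimal sketch of how that kind of generalized keyword could be written as a regular expression in Python. The dictionary layout, the function name, and the sample sentence are all made up for illustration (the sample is not a quotation from the profile), so treat this as one possible way to set it up rather than the actual search list.

```python
import re

# Hypothetical search list: each shooter maps to name patterns plus
# non-name references that are treated like nicknames.
# r"natural\s*select" allows an optional space and any ending
# (selection, selector, ...).
REFERENCE_PATTERNS = {
    "Eric Harris": [r"eric\s+harris", r"natural\s*select", r"god\s*like"],
}

def find_references(text):
    """Return {shooter: [matched snippets]} for every pattern that hits."""
    hits = {}
    for shooter, patterns in REFERENCE_PATTERNS.items():
        for pattern in patterns:
            for match in re.finditer(pattern, text, flags=re.IGNORECASE):
                hits.setdefault(shooter, []).append(match.group(0))
    return hits

# Made-up sample text, only to exercise the patterns.
sample = "I am a natural selector, godlike and ready."
print(find_references(sample))
# {'Eric Harris': ['natural select', 'godlike']}
```

Matching on the stem (“select”) rather than the full word is what lets one pattern cover all the variants.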

We may also notice some socio-political buzzwords that may be of interest later, when we are looking into ideological similarities between shooters other than direct influence. I see “sturm”/“storm” (can be a white nationalist metaphor), “atheist”, “humanist”, etc., as well as loaded descriptions of the shooting action, such as “eliminate” and “my cause” (shooters often reference their “justice day”, “wrath”, “final action”, or whatever). We should start a list of interesting terms to consider later.

We may also note that this is a PDF image, and as such we cannot yet ask our computer to search it as text. We will need to obtain searchable text from our PDFs. I didn’t know about this before this project, but apparently there are a few basic types of PDFs:

1) “Digitally created PDFs” / “True PDFs”: PDFs created with software that gives them internal meta-information designating the locations of text, images, and so on. In a sense, the computer can “see” what the naked eye can see due to this internal structure, and there is preexisting, GUI-having, user-friendly software to navigate these documents with ease manually, one at a time. (Or of course you could automate their navigation yourself in whatever manner you desire; I’m just saying that they’re already cracked open and laid out like a multicourse meal for a layperson to consume, provided they have the appropriate tools on hand.)

2) PDFs that are just images, such as a raw scan of an original document of any kind, or of a picture, or of handwriting, etc. Just any old thing: a picture of it. There is no internal structure to help your computer navigate this; it’s “dead” in its current state. This is the worst case (aka the fun case for us; you’ll see!).

3) PDFs that have been made searchable through application of (e.g.) OCR to an image PDF, yielding text that is then incorporated into the original document as an underlayer. This text can be selected, copied, etc. This is a good situation. In our case, when we OCR image files, we’ll just go ahead and save the text in a file by itself (one per source image PDF) rather than creating searchable PDFs– because that’s all we need!

Our example is a case of #2: just a plain ol’ screenshot that someone took of this YouTube profile.

Now, this is a relatively small document in terms of the text contained within it– if I had a transcription of this text, it wouldn’t kill me to just read through it and see for myself if anything notable is contained therein. However, besides the sheer number of documents, a lot of the documents we’re going to be dealing with are these really interminable, deadly-dry court documents or FBI compendium files that are just hundreds and in some cases (cough, Sandy Hook shooting FBI report) thousands of pages long– fine reading for a rainy day or when I’m sick in bed or something, but not something I want to suffer through on my first attempt to get a high-level glance at who’s talking about whom.

(Seriously, some of the content of these things– there’s stuff like the cops doing the interrogations squabbling about when they’re going on their lunch break and “Wilson, stop fiddling with the tape while I’m interviewing!” and people going back and forth about whether they just properly translated the question to the witness or whether they just asked her about a pig’s shoes by mistake, etc.– and Valery Fabrikant representing himself in trial– merciful God! I’m going to have to do a whole separate post on the comic relief I encountered while going through these, both in terms of actually funny content and in terms of stuff that my computer parsed in a comically bogus way, such as when someone’s love of Count *Cho*cula gave me a false positive for a reference to Seung-Hui *Cho*.) Point being, I’m not gonna do this, I’m gonna make my computer do it. So that’s gonna be half the battle, namely, the second half.
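The Count Chocula mix-up is the kind of thing a plain substring search produces. As a small sketch (the helper name `mentions_name` is made up, and this isn’t necessarily how the search ended up working), requiring word boundaries around a name is one way to cut down on that:

```python
import re

def mentions_name(name, text):
    """True if `name` appears as a whole word, ignoring case."""
    pattern = r"\b" + re.escape(name) + r"\b"
    return re.search(pattern, text, flags=re.IGNORECASE) is not None

# Naive substring search: "cho" is hiding inside "chocula".
print("cho" in "he loved count chocula")                 # True (false positive)

# Whole-word search: only a standalone "Cho" counts.
print(mentions_name("Cho", "He loved Count Chocula."))   # False
print(mentions_name("Cho", "He read about Cho."))        # True
```

The trade-off is that a boundary on both sides would also stop a stem like “natural select” from matching “natural selection”, so stem-style keywords would want a boundary at the front only, if at all.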

First half of the battle is going to be getting the text out of the PDF. Enter optical character recognition (OCR). OCR is, in short, your computer reading. So let’s back it up: when you’re looking at a character of text in some program on your computer, you’re looking at what’s really a manifestation of an underlying numerical representation of that character (meaning your computer knows two different “A”s are both capital As in the sense that they both “mean” [such-and-such number]; for a capital A in ASCII or Unicode, that number is 65). It’s not trying to figure it out from the arrangement of pixels of the character in that font every single time. (Honestly I don’t feel I have enough experience in this area to judge an appropriate summary of the appropriate “main” topics, so I’m just going to link out to someone else and you can read more if you like.)

But when the computer is looking at a picture of someone’s handwriting, or a picture of printed-out text from another computer, it’s only seeing the geometric arrangement of the pixels; it doesn’t yet know where the letters stop and start, or which number to associate a written character with once it is isolated. So what would you do if someone in a part of the world that used a totally unfamiliar alphabet slid you their number scrawled on a napkin (which they’d set their drink on, leaving a big wet inky ring)? First you’d try to mentally eliminate everything that’s not even part of the number– any food, dirt, wet spots, etc. Then you’d try to separate out groupings of marks that constitute separate numbers (like how the word “in” has the line part and the dot part that together make up the letter i– that’s one group– and then the little hump part that constitutes the n– that’s the second group). Then you’d zoom in on the grouping that you had decided made up one numerical digit, and you’d look back and forth between that and a list of all the one-digit numbers in that language in some nice formal print from a book that you are satisfied is standard and “official enough”. So you’d start with the first number in the list, and compare it to your number. Now you could go about this comparison a couple of ways.

1) Draw a little pound sign / graph over your number and over the printed number (imagine a # over the number 5). Compare the bottom left box of your number to the bottom left box of the printed number. Plausibly the same or not at all? Then compare the bottom right box. Etc. Apply some standard of how many boxes have to be similar to decide it’s a match, and when you find a match in the list of printed numbers, stop (or, do the comparison algorithm for each entry in the printed list, and whichever printed number has the most similar boxes to your written number is picked as the answer).

There are some problems with this, though: things might be tilted, different sizes, or written with little flourishes on the ends of the glyphs, in such a way that on a micro level the similarities are disguised. Think of the “a” character as typed in this blog vs. the written “a” character as taught in US primary school (basically an “o” with the line part of an “i” stuck on the right-hand side). The written, elementary-school “a” would likely be determined to be an “o” under the # system. Not good. This box-by-box comparison approach is called matrix matching.
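To make the box-by-box idea concrete, here’s a toy sketch in Python. Everything in it is an assumption for illustration: the 3x3 grids, the three templates, and the “most agreeing boxes wins” rule. Real OCR engines work on much finer grids and do a lot of cleanup first.

```python
# Toy "official" glyphs on a 3x3 grid: '#' is ink, '.' is blank.
TEMPLATES = {
    "o": ["###",
          "#.#",
          "###"],
    "l": [".#.",
          ".#.",
          ".#."],
    "L": ["#..",
          "#..",
          "###"],
}

def similarity(glyph, template):
    """Fraction of boxes where the glyph and the template agree."""
    cells = [(g, t)
             for glyph_row, template_row in zip(glyph, template)
             for g, t in zip(glyph_row, template_row)]
    return sum(1 for g, t in cells if g == t) / len(cells)

def best_match(glyph, templates=TEMPLATES):
    """Name of the template with the most agreeing boxes."""
    return max(templates, key=lambda name: similarity(glyph, templates[name]))

noisy_o = ["###",
           "#.#",
           "##."]          # an "o" with one corner smudged away
shifted_l = ["#..",
             "#..",
             "#.."]        # an "l" written one box too far left

print(best_match(noisy_o))    # o
print(best_match(shifted_l))  # L
```

The smudged “o” still comes out right, but the “l” that merely slid over one box gets read as an “L”, which is the same kind of failure as the handwritten “a” being taken for an “o”.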

2) Attempt to identify the major parts, or “features”, of the character. (For example, we will consider the line and the dot in an “i” to be separate features because they’re separated in space, or the lines in an “x” as individual features as they can be drawn in one stroke, or whatever.) For the “a” we have a round part, and on its right a vertical line of some sort. Okay, now we’re talking generally enough that the two “a”s described above sound pretty similar. This is called feature recognition (you’ll also see it called feature extraction). As you can imagine, it gets pretty complicated to get a computer to decide how to look for features and what’s a feature.
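As a very crude illustration of a “feature”, here’s a Python sketch that counts how many separate blobs of ink a glyph has, so the dot and the line of an “i” count as two. The choice of this particular feature, the grid format, and the function name `count_blobs` are my own assumptions for the example; it isn’t meant to describe what any real OCR engine does internally.

```python
def count_blobs(glyph):
    """Count connected groups of ink cells ('#'), diagonals included."""
    rows, cols = len(glyph), len(glyph[0])
    seen = set()
    blobs = 0
    for r in range(rows):
        for c in range(cols):
            if glyph[r][c] != "#" or (r, c) in seen:
                continue
            # Found ink we haven't visited yet: that's a new blob.
            blobs += 1
            seen.add((r, c))
            stack = [(r, c)]
            while stack:
                cur_r, cur_c = stack.pop()
                # Spread out to every neighbouring ink cell.
                for dr in (-1, 0, 1):
                    for dc in (-1, 0, 1):
                        nr, nc = cur_r + dr, cur_c + dc
                        if (0 <= nr < rows and 0 <= nc < cols
                                and glyph[nr][nc] == "#"
                                and (nr, nc) not in seen):
                            seen.add((nr, nc))
                            stack.append((nr, nc))
    return blobs

letter_i = [".#.", "...", ".#.", ".#.", ".#."]
shifted_i = ["..#", "...", "..#", "..#", "..#"]   # same shape, slid right
letter_l = [".#.", ".#.", ".#.", ".#.", ".#."]

print(count_blobs(letter_i))   # 2
print(count_blobs(shifted_i))  # 2
print(count_blobs(letter_l))   # 1
```

Unlike the box-by-box comparison, the answer doesn’t change when the glyph slides over, which is the appeal of describing characters by their parts. One feature alone obviously can’t tell many letters apart, so you’d need a whole set of them.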

So, that’s the game. There are several “engines” / programs / packages / etc. for performing this task, and I used Tesseract. It’s pretty great at reading all kinds of *typed* characters, but you have to train any engine to read handwriting (one handwriting at a time, by slowly feeding it samples of that writing so it can learn to recognize it). I had so many different people’s handwritings, and so few handwritten documents PER handwriting, that this didn’t seem like the right project for getting into that. I’m definitely going to get back to it for the purposes of transcribing my own handwriting, as I write poetry and prose poetry longhand and have a huge backlog of notebooks to type up (securing all that data is one of my main outstanding life tasks, in fact; there’s really no excuse at this point to endanger all of my writing by leaving it in unbacked-up physical copies).

This post is getting a little long so I’m going to go ahead and put it up and get into the technical stuff in the next post. Peace!