As discussed in my previous post, I’ve been mass-downloading rampage shooters’ manifestos, court documents, etc. and automatically searching them for mentions of the other shooters (by name, event, associated symbols, and so on). For this I used a Python library called Beautiful Soup, and I’d like to say a few words about how the process goes.

What is Beautiful Soup?

Beautiful Soup (library and documentation available at link) describes itself as “a Python library for pulling data out of HTML and XML files. It works with your favorite parser to provide idiomatic ways of navigating, searching, and modifying the parse tree.” It is currently at version 4 (hence I’ll refer to it as BS4 from here on), but code written for version 3 can be ported without much trouble.

What do we want from it?

Put as simply as possible, we want to take a complete website that we find out there on the internet and turn it into an organized set of items mined from within that website, throwing back everything we don’t want. For example, a major source of documents for my project was Peter Langman’s database of original documents pertaining to mass shootings. Here it is “normally” and here it is “behind the scenes”. [pic 1, pic 2] Not only will I want to download all of these documents and leaf through them, I’ll also need to do so in an organized fashion. So a good place to start might be:

Goal: obtain a list or lists of shooters and their associated documents available in this database.

Using Beautiful Soup

Basically, we hand a webpage’s code to BS4 as the “soup” it will work with. BS4 parses the “soup” into a tree, so that we can navigate through it using the site’s HTML tags as search markers and pull out (as strings) whichever types of objects we want.

    import urllib2  # Python 2 standard library, used here to fetch the page
    from bs4 import BeautifulSoup

    site = "https://schoolshooters.info/original-documents"

    page = urllib2.urlopen(site)  # download the raw HTML
    soup = BeautifulSoup(page)    # parse it into a searchable tree

Unprettified soup.

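To open the page I myself chose urllib2, which is part of the Python 2 standard library (in Python 3 its equivalent is urllib.request). BeautifulSoup is the class that builds the soup, and prettify (used later) is a method of the resulting object; both come with the BS4 library. With that, the scene is set for moving through the HTML/text. Some examples follow (these and more can be seen at the BS4 Documentation page).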
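For readers on Python 3, the same fetch-and-parse step might look roughly like the sketch below. It is not the code used for this project: the helper names `make_soup` and `fetch_soup` and the inline sample page are made up for illustration, and naming `"html.parser"` explicitly is my own choice (it ships with Python and avoids BS4's warning about an unspecified parser).

```python
from urllib.request import urlopen

from bs4 import BeautifulSoup


def make_soup(html):
    """Parse an HTML string (or bytes) into a BeautifulSoup tree."""
    # "html.parser" is Python's built-in parser; lxml or html5lib also work
    # if they are installed.
    return BeautifulSoup(html, "html.parser")


def fetch_soup(url):
    """Download a page and parse it. Needs network access."""
    with urlopen(url) as response:
        return make_soup(response.read())


# A tiny hypothetical page, so the parsing step can be tried offline.
sample = "<html><body><h1>Documents</h1><a href='/a.pdf'>A</a></body></html>"
soup = make_soup(sample)

heading = soup.h1.get_text()     # text inside the first <h1>
first_href = soup.a.get("href")  # href attribute of the first <a>
```

Calling `fetch_soup("https://schoolshooters.info/original-documents")` would then play the role of the `urlopen` plus `BeautifulSoup` lines above.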

We can pull out parts of the (prettified) soup by specifying tags, for example:

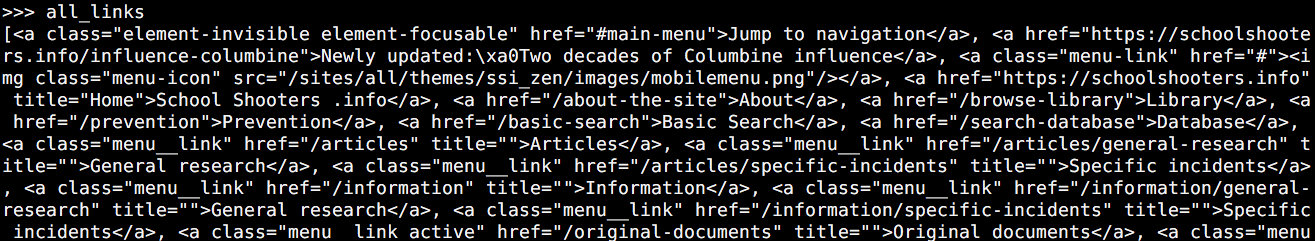

    soup.prettify()  # returns the soup as an indented, readable string
    all_links = soup.find_all("a")  # every <a> tag on the page

Everything with an “<a>” tag.

Pulling out the “href” attribute of each of these “<a>” tags…

    templist = list()
    for link in all_links:
        # link.get returns None when the tag has no href attribute
        templist.append(link.get("href"))

One can work with nested tags as well by iterating the same procedure.
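As a rough illustration of nested tags, the sketch below groups links by the list item they sit in. The markup, class names and file paths are invented for the example and are not the actual structure of the database page, which would need to be inspected first.

```python
from bs4 import BeautifulSoup

# Hypothetical markup: one list item per person, each holding document links.
html = """
<ul>
  <li class="person"><span class="name">Person A</span>
      <a href="/docs/a-journal.pdf">Journal</a>
      <a href="/docs/a-report.pdf">Report</a></li>
  <li class="person"><span class="name">Person B</span>
      <a href="/docs/b-letter.pdf">Letter</a></li>
</ul>
"""

soup = BeautifulSoup(html, "html.parser")

docs_by_person = {}
for item in soup.find_all("li", class_="person"):
    # Outer step: one <li> per person; read the name from its <span>.
    name = item.find("span", class_="name").get_text(strip=True)
    # Inner step: find_all called on a tag searches only inside that tag.
    docs_by_person[name] = [a.get("href") for a in item.find_all("a")]
```

The result is a dictionary mapping each name to its list of links, which is close in shape to the goal stated earlier.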

Unfortunately this is still not my desired list of links, but a simple script of the type below can filter for the appropriate strings and create a file listing them.

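    # Write every link containing "http" to a text file, one per line.
    with open('linkslist.txt', 'w') as f:
        for item in templist:
            # skip tags that had no href (None) before the substring test
            if item and "http" in item:
                f.write(item + "\n")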

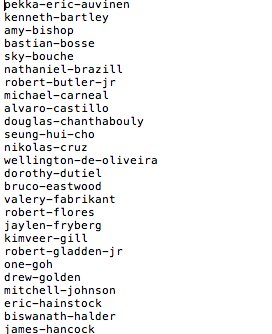

This yields a document such as the one below. Also included are a couple of other samples of basic ways the fished-out data could have been written to documents. Please note that more documents were added to the database after I began this project, so e.g. the William Atchison files are not included here even though they appear in the “soup” pictures. I’ll synchronize the images later.
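The filtering step can also be wrapped in small reusable functions. The sketch below is a slightly stricter variant of the script above, not the same thing: it keeps only strings that start with `http://` or `https://` (instead of merely containing "http") and drops duplicates. The function names and the sample list are made up for illustration.

```python
def keep_absolute_links(hrefs):
    """Return the hrefs that start with http:// or https://, without repeats."""
    seen = set()
    kept = []
    for href in hrefs:
        # link.get("href") gives None for <a> tags that have no href
        if href is None:
            continue
        if href.startswith(("http://", "https://")) and href not in seen:
            seen.add(href)
            kept.append(href)
    return kept


def write_links(hrefs, path):
    """Write one link per line to the file at path."""
    with open(path, "w") as f:
        for href in hrefs:
            f.write(href + "\n")


# Hypothetical input of the kind link.get("href") can produce.
templist = ["https://example.org/a.pdf", None, "#top", "/about",
            "https://example.org/a.pdf", "http://example.org/b.pdf"]
links = keep_absolute_links(templist)
```

Calling `write_links(links, "linkslist.txt")` would then produce the same kind of file as before.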

So now we’ve managed to extract some data that would have taken much longer to gather by hand! Next on the agenda will be mass-downloading my desired files (and avoiding undesired ones) and crawling them for cross-referencing, all while avoiding booby traps! See you in the next post.